What hiring leaders need to know heading into 2026

A couple of years ago, most hiring leaders were still asking whether they should use AI.

Now the question is very different.

Almost everyone already is.

CV screening, chatbots, automated assessments, ranking tools – AI has quietly embedded itself into early-stage hiring workflows. The World Economic Forum estimates that close to 90% of employers now use some form of AI in hiring, often without giving too much thought to what’s actually happening under the hood.

And at the same time, something else has shifted. Regulators, candidates, and boards are no longer asking whether AI saves time. They’re asking harder questions:

– Can we explain this?

– Is it fair?

– Would we defend it if we had to?

That’s the real change. AI in hiring has moved beyond efficiency. It’s becoming a governance issue.

The questions I’m hearing again and again

Over the past 12 months, I’ve noticed a pattern in almost every conversation with large employers.

The discussion rarely starts with features anymore. It starts with risk.

– Are we even allowed to use this AI?

– What happens if a candidate challenges a decision?

– Can we explain the system in plain English to legal, procurement, or a regulator?

Some of this is being driven by regulation – New York City’s Local Law 144, the EU AI Act, and similar frameworks elsewhere. But a lot of it is buyer behaviour.

Legal teams, procurement, AI committees, and boards are now involved in AI decisions in a way they simply weren’t two years ago.

In other words, hiring technology is no longer being judged just on speed. It’s being judged on whether it’s defensible.

The real distinction that suddenly matters

As scrutiny grows, one distinction matters more than almost anything else:

Are you using AI that hides its logic, or AI that shows its working?

That’s the line between black-box screening and compliant AI.

What people usually mean by “black-box”

Most hiring leaders don’t use the term black-box, but they recognise it instantly.

These are systems that produce a score or a ranking without much visibility into how it was reached. Candidates are rejected with little explanation. Recruiters can’t easily see which factors mattered most. Over time, the output starts to feel like a decision, even if it’s technically labelled a “recommendation”.

The issue isn’t automation. It’s opacity.

When no one can clearly explain how a system works, it becomes impossible to defend. That’s where legal, ethical, and reputational risk starts to build, often quietly, until something goes wrong.

What compliant AI looks like in practice

Compliant AI works very differently.

Candidates are informed when AI is involved. They understand what’s being assessed. Recruiters can see how recommendations are generated and why. The system can be audited. And crucially, humans remain responsible for the final decision.

These systems also stay focused on what actually matters for the role: skills, experience, and qualifications, not speculative signals like personality profiling or vague notions of “culture fit”.
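To make that concrete, here is a minimal sketch of what an explainable, auditable recommendation record could look like. It is purely illustrative: the names `Factor`, `Recommendation`, `explain`, and `decide` are hypothetical and do not describe any particular product's data model. The point is simply that the contributing factors are visible, and that nothing counts as a decision until a named human has made it.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Factor:
    """One job-related reason behind a recommendation (hypothetical structure)."""
    name: str       # e.g. a skill, qualification, or type of experience
    evidence: str   # what the candidate provided that supports it
    weight: float   # share of the recommendation attributed to this factor


@dataclass
class Recommendation:
    """An AI recommendation that stays a recommendation until a human decides."""
    candidate_id: str
    candidate_informed_of_ai: bool
    factors: list[Factor] = field(default_factory=list)
    reviewer: Optional[str] = None   # filled in only by a human decision
    decision: Optional[str] = None

    def explain(self) -> str:
        """Plain-English summary, most influential factor first."""
        ordered = sorted(self.factors, key=lambda f: f.weight, reverse=True)
        return "; ".join(f"{f.name} ({f.weight:.0%}): {f.evidence}" for f in ordered)

    def decide(self, reviewer: str, decision: str) -> None:
        """Record the final decision; refuse if the basic safeguards are missing."""
        if not self.candidate_informed_of_ai:
            raise ValueError("candidate must be informed that AI was used")
        if not reviewer:
            raise ValueError("a named human reviewer is required")
        self.reviewer = reviewer
        self.decision = decision


# Example usage with made-up data
rec = Recommendation(
    candidate_id="c-1",
    candidate_informed_of_ai=True,
    factors=[
        Factor("SQL", "3 years in an analytics role", 0.4),
        Factor("Python", "portfolio project", 0.6),
    ],
)
print(rec.explain())
rec.decide(reviewer="alex", decision="advance to interview")
```

A record like this is what lets a recruiter, a legal team, or an auditor answer “why was this candidate recommended, and who decided?” without reverse-engineering a score.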

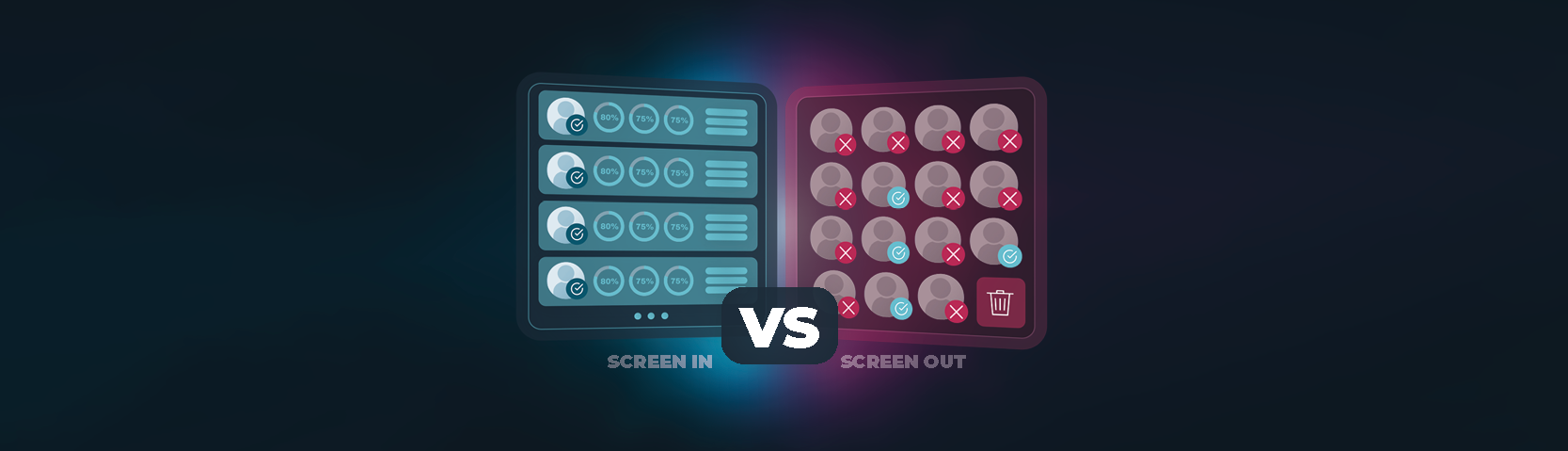

One design principle matters more than most: screening people in, not out.

Instead of rejecting candidates early based on thin data, compliant systems gather more signal. They ask follow-up questions. They identify gaps. They give people a chance to show what they can actually do.

For candidates, the experience feels fairer and more transparent.

For employers, it’s far easier to stand behind.

A quick mental check

If you’re using AI in hiring heading into 2026, there are a few questions you should be able to answer without hesitation:

– Are candidates clearly informed when AI is used?

– Can we explain the logic of the system in plain English?

– Can recruiters see and audit how recommendations are made?

– Is there independent evidence of fairness and bias testing?

– Do humans always make the final decision?

If any of those feel uncomfortable, that’s a signal worth paying attention to. Not because the technology is bad, but because the environment around it isn’t ready.
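On the fairness question in particular, it helps to know what the evidence can look like in numbers. One metric commonly reported in bias audits, including those required under New York City’s Local Law 144, is the impact ratio: each group’s selection rate divided by the selection rate of the most-selected group. The sketch below is a simplified illustration, not an audit methodology. The group labels and counts are made up, the function names are hypothetical, and the 0.8 threshold is the traditional “four-fifths” rule of thumb rather than a pass mark set by any specific regulation.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (number selected, number of applicants)."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0  # skip groups with no applicants
    }


def impact_ratios(outcomes):
    """Each group's selection rate relative to the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is nothing to compare
        return {group: 0.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Made-up example data: (selected, applicants) per group
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}

ratios = impact_ratios(outcomes)
# Assumed rule-of-thumb threshold; a real audit would justify its own criteria
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]

for group, ratio in ratios.items():
    print(group, round(ratio, 2))
print("needs a closer look:", flagged)
```

In this example group_b is selected at 30% against group_a’s 50%, giving an impact ratio of 0.6, which is exactly the kind of gap an independent audit should surface and a vendor should be able to explain.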

How we’ve been thinking about this at VONQ

This shift forced us to get very honest internally.

Not about what AI can do, but about what it shouldn’t do.

One line we kept coming back to was this: the moment AI starts making hiring decisions instead of supporting them, we’ve crossed a line. Not just ethically, but practically. Because if a system can’t be explained, it can’t be defended. And if it can’t be defended, it won’t survive long in an enterprise environment.

So we made a deliberate choice.

AI should augment human judgement, not replace it.

Its role is to reduce noise, surface signal, and take on the work humans shouldn’t have to do, not to outsource accountability.

That thinking shaped how we built EQO.

Instead of a single opaque model operating in the background, we designed specialised AI agents to support specific parts of the process: screening, interviewing, assessment, and scoring. They always run inside existing ATS workflows, and always with humans firmly in the loop.

The AI structures information, asks follow-up questions when data is missing, highlights patterns, and surfaces what matters. Recruiters still decide.

We also took a strong stance on something that doesn’t get talked about enough: bringing more signal in, rather than filtering people out faster.

Most hiring technology is optimised for speed of rejection. Ours is optimised for quality of understanding. If something’s unclear, the system probes. If potential is there but under-evidenced, it asks.

The goal isn’t speed at all costs. It’s better decisions, with less guesswork.

That design choice wasn’t philosophical. It was practical.

The more explainable a system is, the easier it is to govern. And governance is now part of the buying decision, whether vendors like it or not.

That’s also why we chose to put EQO through independent bias and fairness audits early, even when it was uncomfortable and time-consuming. Not because it makes for good marketing, but because this is the level of scrutiny we see employers increasingly being held to.

If AI is going to sit anywhere near hiring decisions, “trust us” is no longer a sufficient answer.

Where this is all heading

Hiring will never be fully human again, and that’s progress, not loss.

What dehumanises hiring isn’t AI. It’s volume, cognitive overload, and opaque systems no one can explain. When recruiters are forced to skim hundreds of CVs under pressure, bias creeps in; it doesn’t disappear.

Applied responsibly, AI can actually make hiring more human: more consistent, more transparent, more explainable.

That’s why the winners will be organisations that move faster by embracing responsible AI early in the hiring process. In this next phase of hiring, speed, clarity, and trust are no longer nice-to-haves; they’re genuine competitive advantages.

The systems that win over the next few years won’t be the most impressive demos. They’ll be the ones that fit into real organisations, scale responsibly, and can be defended with a straight face when someone asks: “Can you explain how this works?”