The default model: filter first, understand later

Today, more than 90% of employers use automated systems to filter or rank applications*, making early-stage AI screening the default model across hiring. At the same time, 35% of recruiters worry that automated screening may exclude candidates with unique skills or non-traditional backgrounds**, highlighting a growing concern around how these systems evaluate talent.

Most AI screening tools are built to reject candidates faster. They evaluate incomplete data, apply rigid rules, and remove candidates early – often before the full picture is understood.

This approach creates two fundamental risks. First, qualified candidates may be excluded simply because key information is missing from their application, not because they lack the qualification itself. Second, fairness is often assumed rather than measured, leaving organizations without clear evidence that outcomes remain equitable across different groups.

In today’s hiring environment, shaped by growing regulatory and ethical scrutiny, assumed fairness is no longer enough. It must be demonstrated.

A different design choice: expanding signal before judgement

EQO was built on a different principle: screen in, not screen out.

EQO, VONQ’s AI agents, uses a coordinated system of specialized AI agents that work together across the hiring lifecycle. It transforms large applicant volumes into structured, ranked shortlists so recruiters can focus on the most relevant candidates.

Instead of silently rejecting incomplete applications, EQO actively asks candidates for missing, job-relevant information: clarifying qualifications, filling gaps, and building a more complete candidate profile before any evaluation takes place.

This approach creates a fairer and more transparent experience for candidates, while helping surface potential that would not appear on a CV alone. Recruiters gain better signal, more consistent evaluation, and fewer premature exclusions, all without losing control over final decisions.
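To make the "screen in" idea concrete, here is a minimal Python sketch of the ordering it implies: check for gaps, ask the candidate, and only then evaluate. It is an illustration under stated assumptions, not EQO's implementation. The field names, the `Application` class, and the `next_step` function are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical job-relevant fields, chosen for illustration only.
REQUIRED_FIELDS = ("work_authorization", "years_experience", "certification")


@dataclass
class Application:
    candidate_id: str
    answers: dict = field(default_factory=dict)


def missing_fields(app, required=REQUIRED_FIELDS):
    """Return the required fields the candidate has not provided yet."""
    # A value of 0 counts as an answer; only None or an empty string is a gap.
    return [name for name in required if app.answers.get(name) in (None, "")]


def next_step(app):
    """Ask for missing information first; evaluate only complete profiles."""
    gaps = missing_fields(app)
    if gaps:
        return ("ask_candidate", gaps)
    return ("evaluate", [])
```

In this sketch an application with unanswered fields is never rejected: `next_step` returns the list of questions to send back to the candidate, and evaluation starts only once that list is empty.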

But design alone does not guarantee fairness. Outcomes must be independently verified.

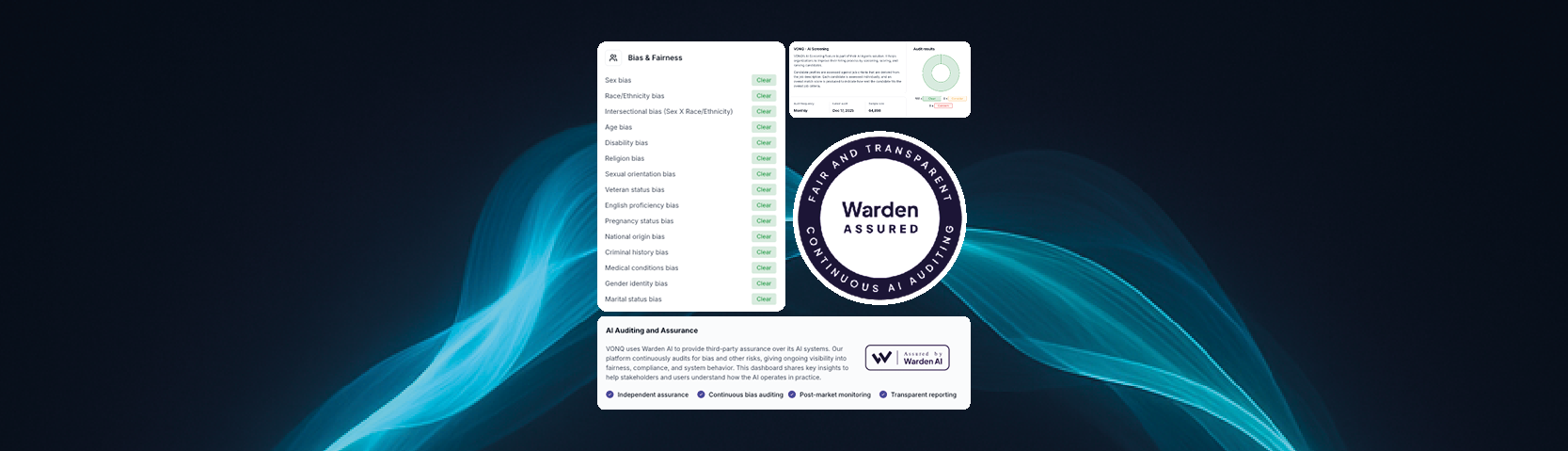

Independent assurance, not vendor claims

To ensure EQO operates fairly in real-world use, VONQ engaged Warden AI, an independent AI audit and assurance provider.

Warden does not endorse or influence the system under test. Its role is to independently evaluate whether EQO behaves equitably across protected characteristics using external datasets and established bias detection methodologies.

Warden conducts ongoing monthly audits and publishes the results on a transparent public dashboard. Under this continuous monitoring model, fairness is not proven once but verified over time, even as systems evolve.

How fairness is actually measured

Warden evaluates EQO using multiple complementary techniques designed to detect both structural and emerging bias, including:

- Disparate Impact Analysis – assessing whether outcomes differ across demographic groups

- Counterfactual Analysis – testing whether changing protected attributes alters results

- Independent external datasets representing diverse candidate populations

- Continuous monitoring to detect model drift and unexpected bias
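For readers who want to see what the first two techniques look like in practice, the sketch below shows one common way to compute them in Python. It is a simplified illustration, not Warden's methodology: the function names, the toy data shapes, and the `score` callable are assumptions made for the example.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(records):
    """Disparate impact: each group's rate divided by the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}  # nobody selected, nothing to compare
    return {group: rate / best for group, rate in rates.items()}


def counterfactual_flips(score, profiles, attribute, alternatives, tolerance=0.0):
    """Count profiles whose score changes when only `attribute` is swapped."""
    flips = 0
    for profile in profiles:
        base = score(profile)
        for value in alternatives:
            if value == profile.get(attribute):
                continue  # same value as the original, not a counterfactual
            variant = {**profile, attribute: value}
            if abs(score(variant) - base) > tolerance:
                flips += 1
                break  # count each profile at most once
    return flips
```

With 40 of 100 candidates selected in one group and 30 of 100 in another, `impact_ratios` gives the second group a ratio of about 0.75. A widely used rule of thumb, the four-fifths rule, treats ratios below 0.8 as a signal worth investigating; a formal audit would typically add statistical significance testing on top. `counterfactual_flips` should return 0 for any scorer that truly ignores the protected attribute.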

EQO has undergone independent bias and fairness audits aligned with major regulatory frameworks: NYC Local Law 144, the EU AI Act, Colorado SB 205, and California FEHA. Across all of the frameworks under which EQO was evaluated, the audit did not identify statistically significant disparities in any of the tested bias categories, including gender, race/ethnicity, age, disability, religion, and intersectional characteristics.

These results indicate consistent and equitable system behavior across demographic groups under independent evaluation.

Importantly, this is not a static certification. Continuous monitoring checks that fairness remains stable as the system evolves, a critical requirement for trustworthy AI in operational hiring environments.

From fairness as a principle to fairness as evidence

For HR leaders, talent teams, and platform providers, independently audited AI changes the nature of trust.

Fairness becomes observable rather than assumed.

Decisions become auditable and defensible. Risk exposure is reduced, compliance readiness improves, and candidate confidence increases.

Most importantly, more candidates are seen and evaluated based on complete information, not filtered out based on partial data.

Hiring AI that can be defended

The future of hiring AI will not be defined by automation alone, but by verifiable trust.

Systems must not only be efficient and explainable; they must also be continuously monitored, independently validated, and demonstrably fair.

Screening in is not just a design philosophy. It is a measurable shift toward more transparent, accountable, and defensible hiring.